RT-H: Action Hierarchies Using Language

Abstract

Language provides a way to break down complex concepts into digestible pieces. Recent works in robot imitation learning have proposed learning language-conditioned policies that predict actions given visual observations and the high-level task specified in language. These methods leverage the structure of natural language to share data between semantically similar tasks (e.g., "pick coke can" and "pick an apple") in multi-task datasets. However, as tasks become more semantically diverse (e.g., "pick coke can" and "pour cup"), sharing data between tasks becomes harder and thus learning to map high-level tasks to actions requires substantially more demonstration data. To bridge this divide between tasks and actions, our insight is to teach the robot the language of actions, describing low-level motions with more fine-grained phrases like "move arm forward" or "close gripper". Predicting these language motions as an intermediate step between high-level tasks and actions forces the policy to learn the shared structure of low-level motions across seemingly disparate tasks. Furthermore, a policy that is conditioned on language motions can easily be corrected during execution through human-specified language motions. This enables a new paradigm for flexible policies that can learn from human intervention in language. Our method RT-H builds an action hierarchy using language motions: it first learns to predict language motions, and conditioned on this along with the high-level task, it then predicts actions, using visual context at all stages. Experimentally we show that RT-H leverages this language-action hierarchy to learn policies that are more robust and flexible by effectively tapping into multi-task datasets. We show that these policies not only allow for responding to language interventions, but can also learn from such interventions and outperform methods that learn from teleoperated interventions.

Describing Robot Motions in Language

Language encodes the shared structure between similar tasks

Language-conditioned policies in robotics leverage the structure of natural language to share data between semantically similar tasks (e.g., "pick coke can" and "pick an apple") in multi-task datasets. But as tasks become more semantically diverse (e.g., "pick the apple" and "knock the bottle over" below), sharing data between tasks is much harder.

Our insight is to teach the robot the language of actions

To encourage data sharing, our insight is to describe low-level motions with more fine-grained phrases like "move arm forward" or "close gripper". We predict these language motions as an intermediate step between high-level tasks and actions, forcing the policy to learn the shared motion structure across tasks.
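To make the idea of a language motion concrete, here is a minimal Python sketch of how a single end-effector displacement might be turned into a phrase such as "move arm forward". The page only says that motions are labeled by an automated procedure. The axis convention, the `threshold` value, the gripper sign convention, the priority given to gripper changes, and the function name `label_language_motion` are all assumptions made for illustration, and rotations are omitted for brevity.

```python
from typing import Sequence, Tuple

# Hypothetical axis convention (assumption): index 0 = forward/backward,
# index 1 = left/right, index 2 = up/down, in the robot base frame.
AXIS_WORDS: Tuple[Tuple[str, str], ...] = (
    ("forward", "backward"),
    ("left", "right"),
    ("up", "down"),
)


def label_language_motion(
    delta_xyz: Sequence[float],
    gripper_delta: float,
    threshold: float = 0.01,
) -> str:
    """Map one end-effector displacement to a coarse language motion.

    Hypothetical labeling rule, not the RT-H procedure: a gripper change
    above `threshold` takes priority; otherwise every translation axis whose
    magnitude exceeds `threshold` contributes one direction word.
    """
    # Assumed sign convention: positive gripper_delta means closing.
    if abs(gripper_delta) > threshold:
        return "close gripper" if gripper_delta > 0 else "open gripper"

    words = []
    for value, (positive, negative) in zip(delta_xyz, AXIS_WORDS):
        if abs(value) > threshold:
            words.append(positive if value > 0 else negative)

    # Nothing moved enough to be worth describing.
    if not words:
        return "stop"
    return "move arm " + " and ".join(words)


if __name__ == "__main__":
    print(label_language_motion([0.05, 0.0, 0.0], 0.0))     # move arm forward
    print(label_language_motion([0.0, -0.02, -0.03], 0.0))  # move arm right and down
    print(label_language_motion([0.0, 0.0, 0.0], 0.5))      # close gripper
```

Because the same phrase covers many slightly different displacements, tasks as different as picking and knocking over can end up sharing labels like "move arm forward", which is the kind of shared structure the hierarchy is meant to exploit.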

Language motions enable easy intervention in language space

Language motions enable a new paradigm for flexible policies that can learn from human intervention in language. At test time, we can provide corrective language motions to the policy, and it will follow these motions to improve on the task. We can then learn from these interventions to improve the policy downstream.
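The intervention loop described above can be sketched as follows. This is a hypothetical Python illustration, not the RT-H code: `predict_motion`, `predict_action`, and `get_correction` are stand-in callables for the policy's motion prediction, the policy's action prediction, and a human operator, and observations are plain strings to keep the sketch self-contained. A correction simply replaces the predicted language motion before the action is computed, and each correction is logged as an (observation, task, language motion) example for later training.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical signatures (assumptions, not an RT-H API).
MotionQuery = Callable[[str, str], str]                   # (obs, task) -> language motion
ActionQuery = Callable[[str, str, str], str]              # (obs, task, motion) -> action
CorrectionFn = Callable[[str, str, str], Optional[str]]   # None means no intervention


@dataclass
class InterventionLog:
    # (observation, task, corrected language motion) triples for later fine-tuning.
    samples: List[Tuple[str, str, str]] = field(default_factory=list)


def run_episode(
    observations: Sequence[str],
    task: str,
    predict_motion: MotionQuery,
    predict_action: ActionQuery,
    get_correction: CorrectionFn,
    log: InterventionLog,
) -> List[str]:
    actions = []
    for obs in observations:
        motion = predict_motion(obs, task)
        correction = get_correction(obs, task, motion)
        if correction is not None:
            # The human's language motion overrides the policy's own prediction.
            motion = correction
            log.samples.append((obs, task, correction))
        # The action is always conditioned on whichever motion was kept.
        actions.append(predict_action(obs, task, motion))
    return actions


if __name__ == "__main__":
    log = InterventionLog()
    actions = run_episode(
        ["frame0", "frame1", "frame2"],
        "open jar",
        predict_motion=lambda obs, task: "move arm down",
        predict_action=lambda obs, task, motion: f"action<{motion}>",
        # Hypothetical operator who steps in once, at frame1.
        get_correction=lambda obs, task, motion: "move arm up" if obs == "frame1" else None,
        log=log,
    )
    print(actions)      # ['action<move arm down>', 'action<move arm up>', 'action<move arm down>']
    print(log.samples)  # [('frame1', 'open jar', 'move arm up')]
```

The point of the design is that the operator only ever speaks in the same short phrases the policy already predicts, so the logged corrections can be used directly as training targets for the motion prediction step.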

Method

Our method RT-H builds an action hierarchy using language motions: it first learns to predict language motions, and conditioned on this along with the high-level task, it then predicts actions, using visual context at all stages.
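One way to write this two-stage hierarchy, using notation introduced here only for illustration: $s_t$ is the visual observation at step $t$, $g$ is the high-level task, $z_t$ is the language motion, $a_t$ is the action, and $\pi_h$ and $\pi_l$ denote the two prediction stages.

```latex
\begin{aligned}
z_t &\sim \pi_h(z \mid s_t, g)      && \text{(predict a language motion, e.g. ``move arm forward'')} \\
a_t &\sim \pi_l(a \mid s_t, g, z_t) && \text{(predict an action given the task and that motion)}
\end{aligned}
```

Both stages condition on $s_t$, matching the use of visual context at all stages. Under this reading, a language intervention amounts to replacing the predicted $z_t$ with a human-specified motion before the second stage is evaluated.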

Experiments

We evaluate RT-H on (1) how well it learns from diverse multi-task datasets, (2) how well it learns from intervention compared to teleoperated intervention methods, and (3) how well it generalizes to new scenes, objects, and tasks.

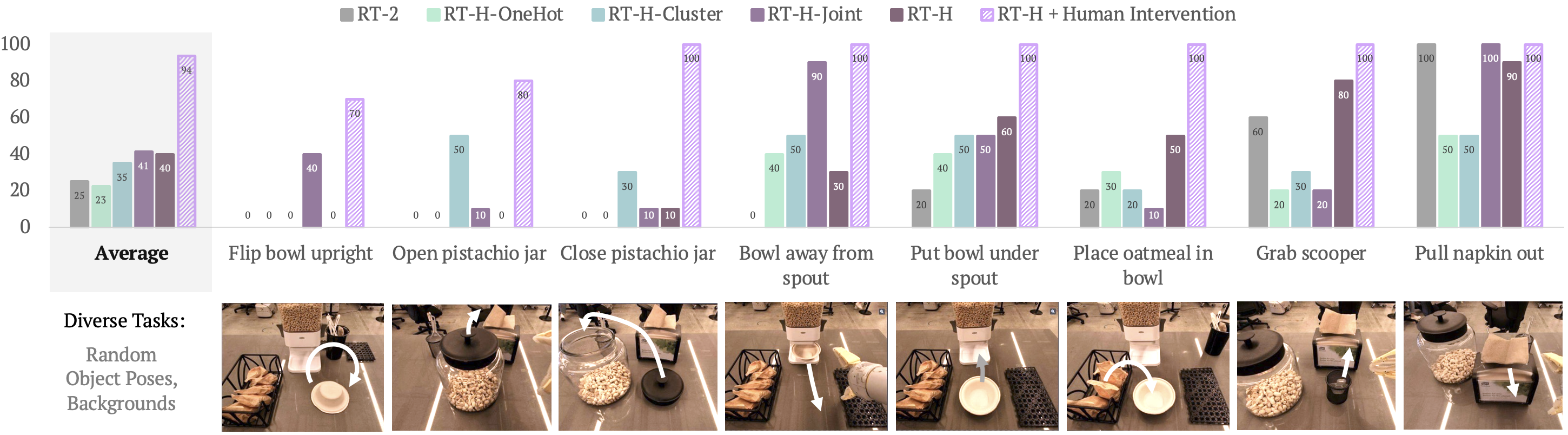

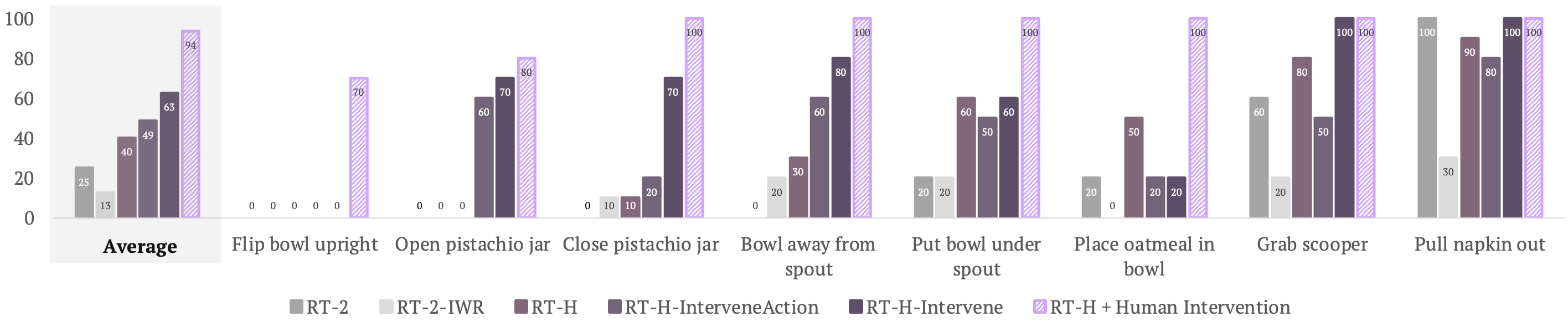

Training on Diverse Tasks

RT-H outperforms RT-2 by 15% on average, achieving higher performance on 6 of the 8 tasks. Replacing language with class labels (RT-H-OneHot) drops performance significantly. Using K-Means action clusters instead of the automated motion labeling procedure (RT-H-Cluster) also leads to a minor drop in performance, demonstrating the utility of language motions as the intermediate action layer.
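For contrast with language motions, the RT-H-Cluster ablation replaces them with K-Means clusters of actions. The sketch below is a minimal pure-Python version of that idea under stated assumptions: the action features (2-D translation deltas), the number of clusters `k`, the first-k initialization, and the `cluster_token` naming scheme are hypothetical choices, not details from the source. Each action is mapped to a token like `cluster_0`, which would stand in for a phrase like "move arm forward" at the intermediate layer.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def nearest_centroid(point: Sequence[float], centroids: Sequence[Point]) -> int:
    # Index of the centroid with the smallest Euclidean distance to `point`.
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points: Sequence[Point], k: int, iters: int = 20) -> Tuple[List[Point], List[int]]:
    # Deterministic initialization from the first k points (an assumption;
    # k-means++ or random restarts are common in practice).
    centroids: List[Point] = [tuple(p) for p in points[:k]]
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [nearest_centroid(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        updated: List[Point] = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                updated.append(tuple(sum(coords) / len(members) for coords in zip(*members)))
            else:
                updated.append(centroids[c])  # leave an empty cluster where it was
        if updated == centroids:
            break  # converged: assignments can no longer change
        centroids = updated
    return centroids, labels


def cluster_token(action: Sequence[float], centroids: Sequence[Point]) -> str:
    # Hypothetical discrete label used in place of a language motion.
    return f"cluster_{nearest_centroid(action, centroids)}"


if __name__ == "__main__":
    # Toy 2-D translation deltas: some mostly along axis 0, some mostly along axis 1.
    actions = [(0.05, 0.0), (0.0, 0.05), (0.06, 0.01), (0.01, 0.06), (0.055, 0.0)]
    centroids, labels = kmeans(actions, k=2)
    print(labels)                                 # [0, 1, 0, 1, 0]
    print(cluster_token((0.07, 0.0), centroids))  # cluster_0
```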

Contextuality in RT-H evaluations.

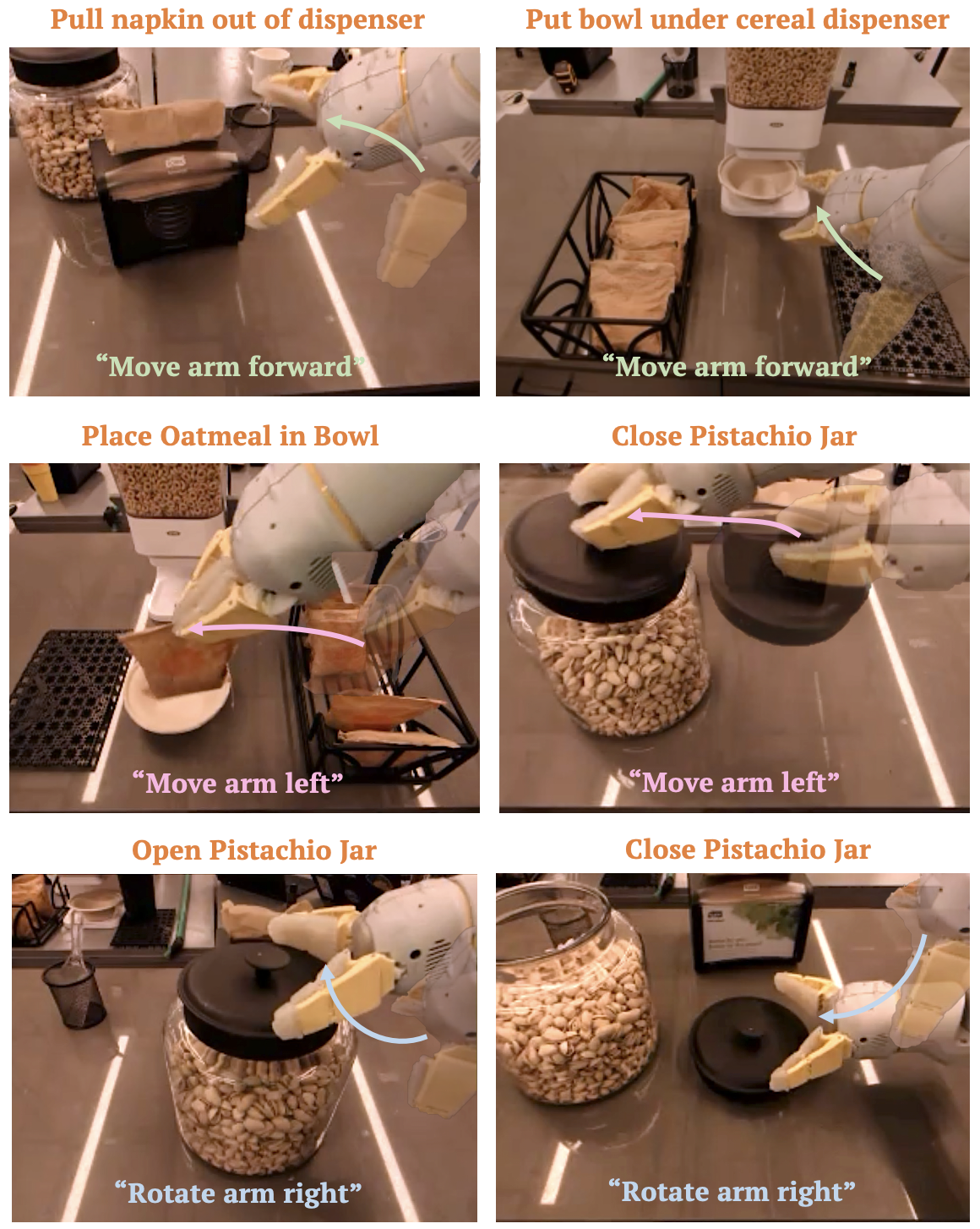

Language motions depend on the context of the scene and task. In each row, the given language motion ("move arm forward", "move arm left", or "rotate arm right") manifests with different variations (columns) depending on the task and observation, such as subtle changes in speed, movement along non-dominant axes (e.g., rotation during "move arm forward"), and even different gripper positions.

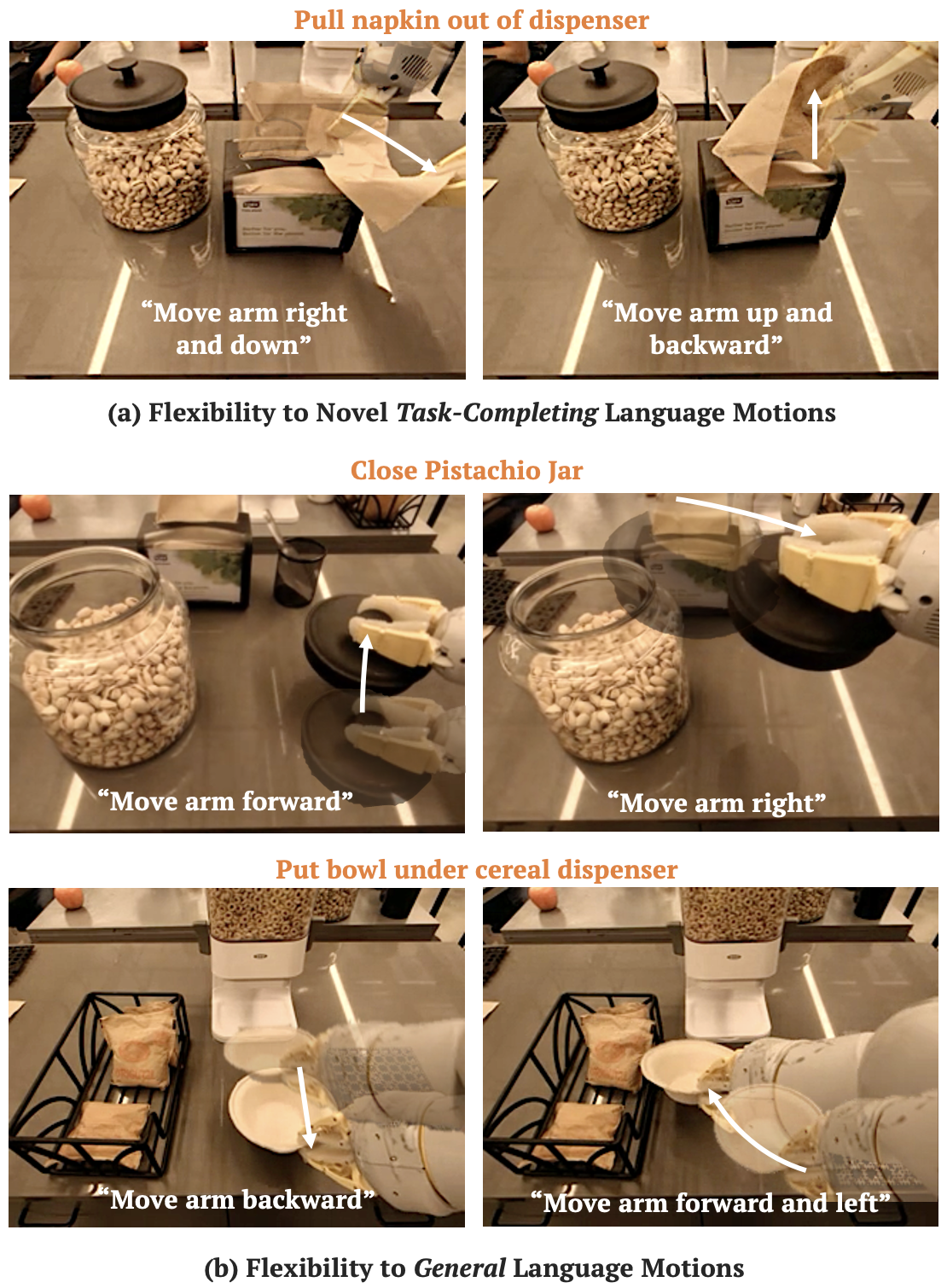

Flexibility in RT-H evaluations.

In the top row (a), we correct RT-H using two different task-completing language motions for pulling the napkin out of the dispenser: either "right and down" or "up and backward". In the bottom two rows (b), we demonstrate that RT-H is often flexible even to language motions that are completely out-of-distribution for a task.

RT-H Rollouts

Pull Napkin from Dispenser

Close Pistachio Jar

Training on Interventions

We see that RT-H-Intervene both improves upon RT-H (from 40% to 63% with just 30 intervention episodes per task) and substantially outperforms RT-2-IWR, suggesting that language motions are a much more sample-efficient space in which to learn corrections than teleoperated actions.
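To put the intervention result in proportion, the two numbers quoted above correspond to the following absolute and relative gains, computed directly from the reported figures:

```latex
\begin{aligned}
\Delta_{\text{abs}} &= 63\% - 40\% = 23 \text{ percentage points} \\
\Delta_{\text{rel}} &= \frac{63 - 40}{40} = 0.575 \approx 58\%
\end{aligned}
```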

RT-H

Open Jar - Before

RT-H-Intervene

Open Jar - After

Before intervention training, RT-H moves its arm too low to grasp the jar lid. To correct this behavior, we can specify a correction online that tells the robot to move its arm up before it hits the jar. After training on these interventions, we find that RT-H-Intervene (right) is now better at opening the jar.

Move Bowl Away from Spout - Before

Move Bowl Away from Spout - After

Similarly, in this example, before intervention training RT-H does not move its arm close enough to the bowl to grasp it. To correct this behavior, we can specify a correction online that tells the robot to move its arm farther forward before it grasps. After training on these interventions, we find that RT-H-Intervene (right) is now better at moving the bowl away from the spout.

Generalization

Next, we test the ability of RT-H to generalize to new scenes (different backgrounds, lighting, and flooring), new objects, and novel tasks (with human intervention).

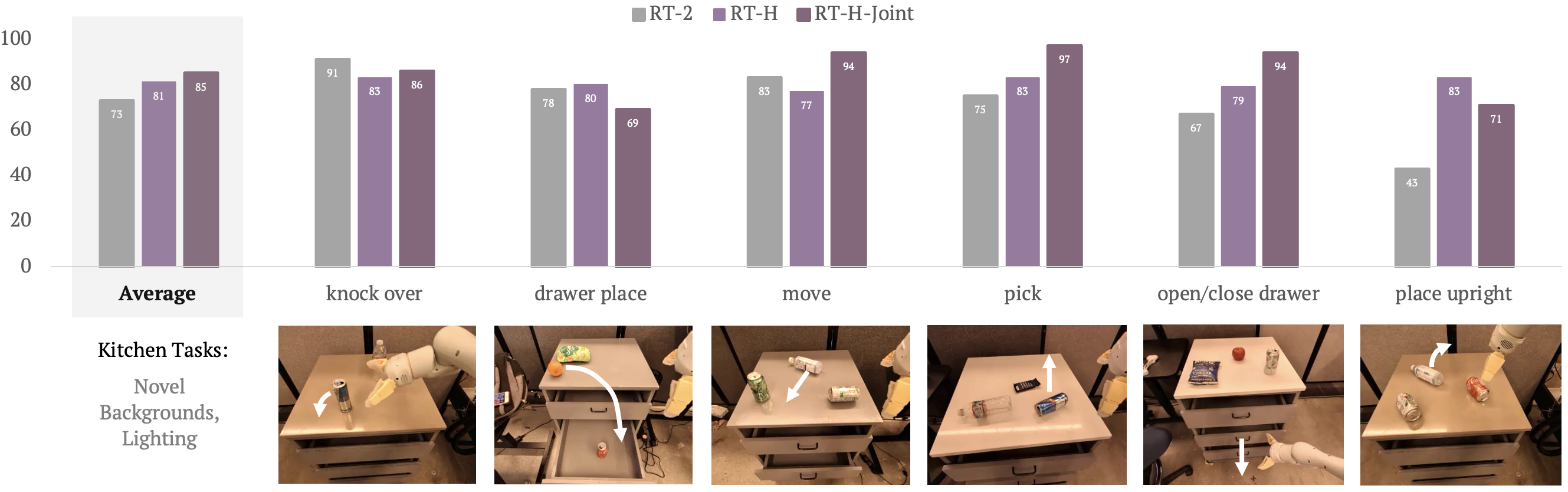

New Scenes

We see that RT-H and RT-H-Joint (the methods with a language-motion-based action hierarchy) generalize better to novel scenes, by 8-12% on average compared to RT-2.

New Objects

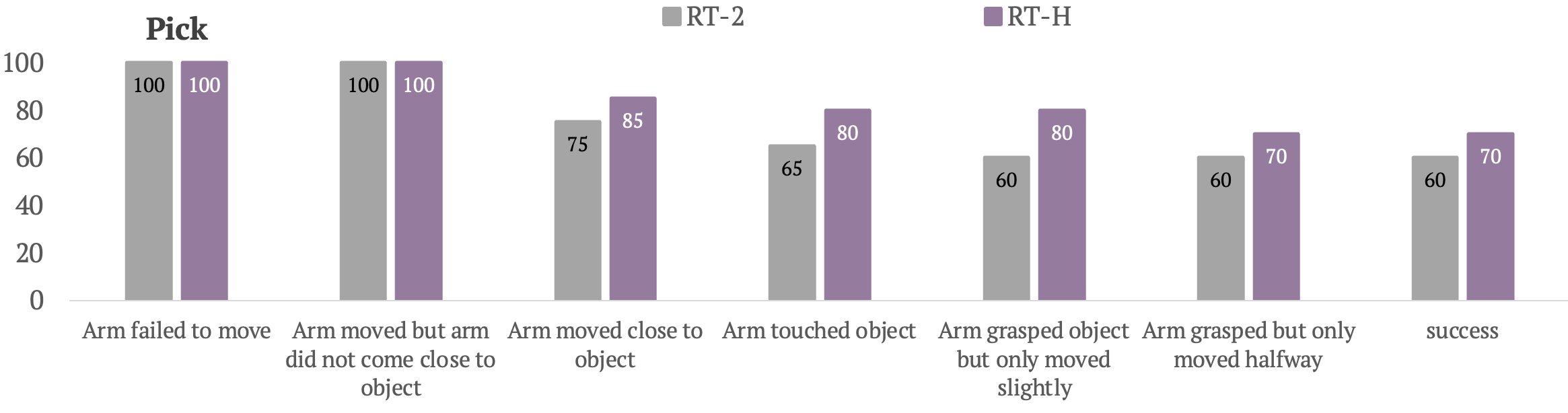

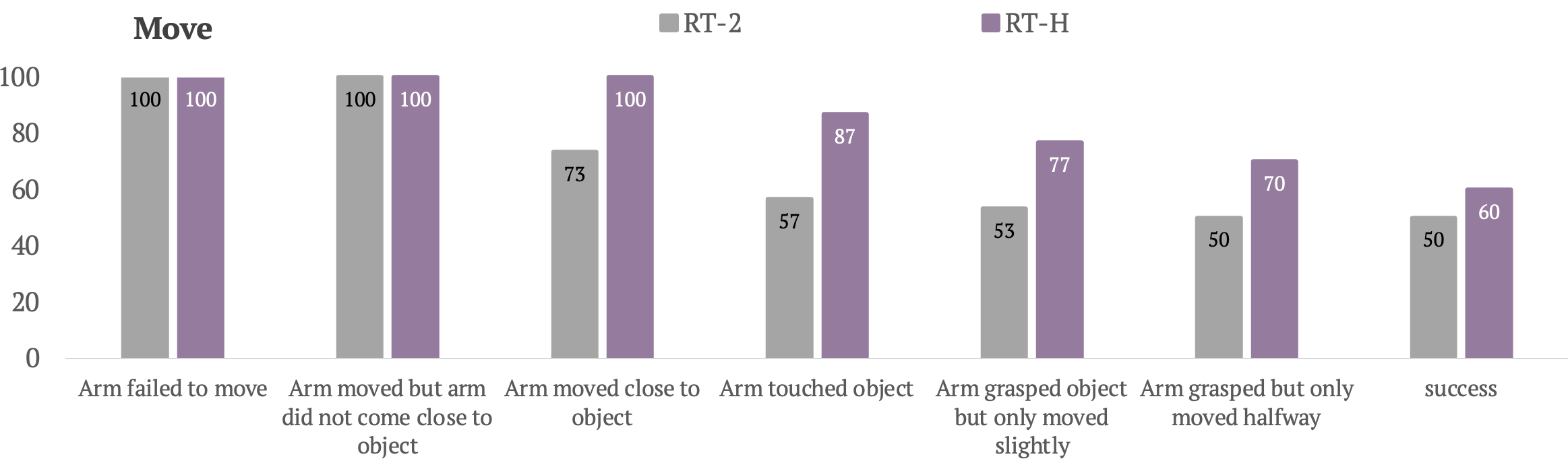

We see that RT-H generalizes better to novel objects by 10% on average compared to RT-2 (rightmost "success" bars), and does even better at earlier stages of the task compared to RT-2.

New Tasks with Intervention

Unstack the Cups (w/ human intervention) 2x

Place Apple in Jar (w/ human intervention) 2x

While RT-H cannot perform these novel tasks zero-shot, it shows promising signs of learning the shared phases of novel tasks. RT-H can unstack the cups (left) with human intervention needed only after the cups have been picked up; similarly, it can place an apple into a jar with intervention needed only after it has picked up the apple and moved it close to the jar. This highlights the promise of RT-H to generalize with less data than flat models.

Acknowledgements

We thank Tran Pham, Dee M, Utsav Malla, April Zitkovich, and Elio Prado for their contributions to robot evaluation.

The website template was borrowed from Jon Barron.